
The deep rabbit hole of DIY small ergonomic keyboards


Comparing GPT-4, 3.5, and some offline local LLMs at the task of generating flashcards for spaced repetition (e.g., Anki)

tldr: I used GPT-4 Turbo, GPT-3.5 Turbo, and two open-source offline LLMs to create flashcards for a spaced repetition system (Anki) on a mathematical topic; I rated the 100 LLM-suggested flashcards (i.e., question-answer pairs) along the dimensions of truthfulness, self-containment, atomicity, whether the question-answer makes sense as a flashcard, and whether I would include a similar flashcard in my deck; I analyzed and compared the different LLMs’ performance based on all of that; then crowned the winner LLM :crown: or maybe not… And, because the blog post ended up being long and detailed, here is a figure combining all of the final results:


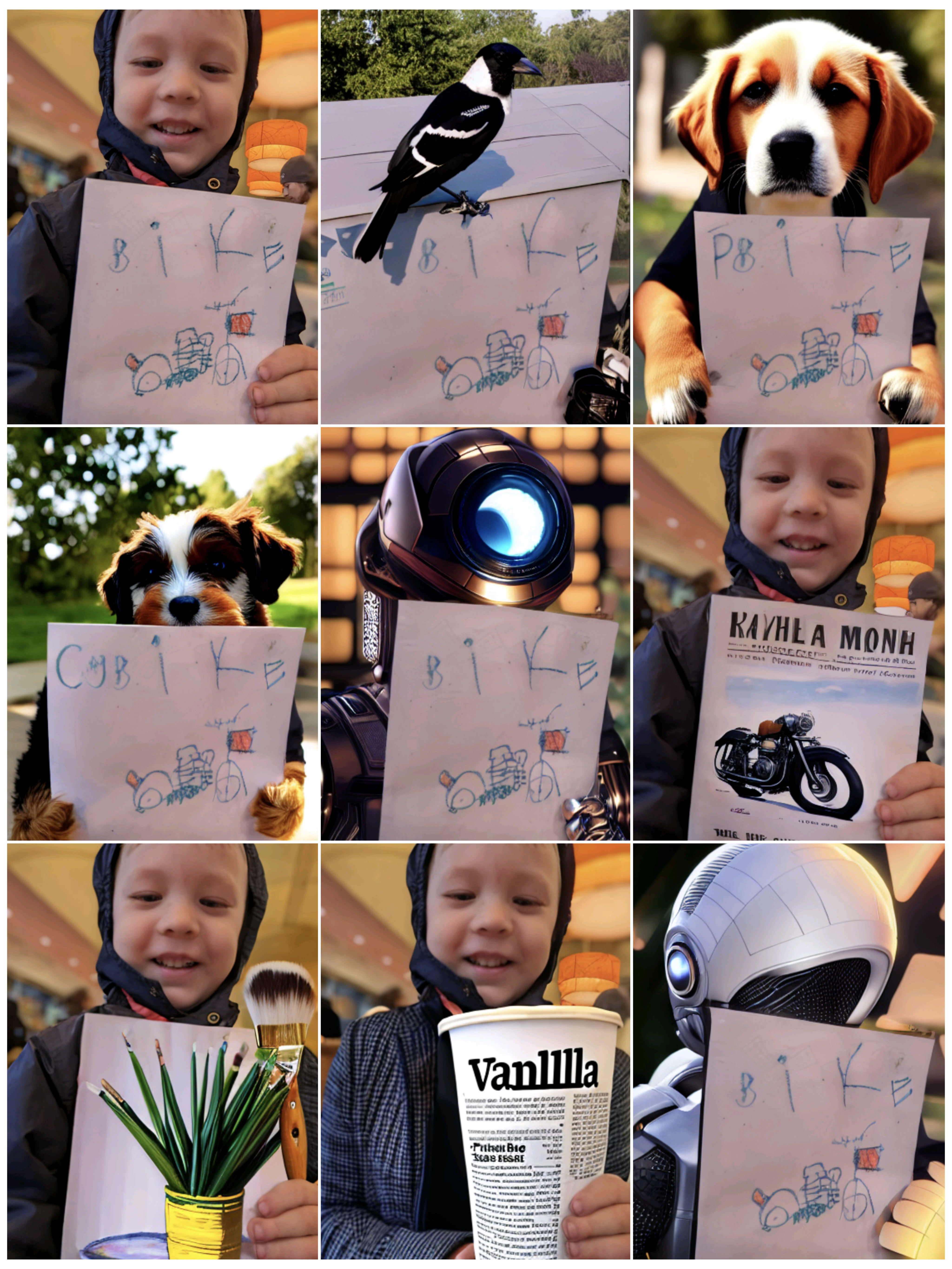

Having some fun with Stable Diffusion Inpainting in Python on New Year's Day


Concordance Correlation Coefficient

If we collect \(n\) independent pairs of observations \((y_{11}, y_{12}), (y_{21}, y_{22}), \dots, (y_{n1}, y_{n2})\) from some bivariate distribution, how can we estimate the expected squared perpendicular distance of each such point in the 2D plane from the 45-degree line (i.e., the line \(y_2 = y_1\))?
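A minimal pure-Python sketch of the plug-in answer, assuming 1/n (rather than 1/(n-1)) moment estimates: the perpendicular distance of \((a, b)\) from the 45-degree line is \(|a - b|/\sqrt{2}\), so the natural estimate is the average of \((y_{i1} - y_{i2})^2/2\). Since \(\frac{1}{n}\sum_i (y_{i1} - y_{i2})^2 = (\bar y_1 - \bar y_2)^2 + s_1^2 + s_2^2 - 2 s_{12}\), normalizing this quantity leads to Lin's concordance correlation coefficient \(\rho_c = 2 s_{12} / (s_1^2 + s_2^2 + (\bar y_1 - \bar y_2)^2)\). The function names here are hypothetical.

```python
from statistics import fmean


def mean_sq_perp_distance(y1, y2):
    """Average squared perpendicular distance of the points (y1[i], y2[i]) from the line y2 = y1."""
    # The distance of (a, b) from the line y2 = y1 is |a - b| / sqrt(2),
    # so its square is (a - b)^2 / 2; average that over all n pairs.
    return fmean((a - b) ** 2 / 2 for a, b in zip(y1, y2))


def concordance_cc(y1, y2):
    """Lin's concordance correlation coefficient with 1/n (plug-in) moment estimates."""
    m1, m2 = fmean(y1), fmean(y2)
    v1 = fmean((a - m1) ** 2 for a in y1)                  # variance of y1 (1/n)
    v2 = fmean((b - m2) ** 2 for b in y2)                  # variance of y2 (1/n)
    c12 = fmean((a - m1) * (b - m2) for a, b in zip(y1, y2))  # covariance (1/n)
    return 2 * c12 / (v1 + v2 + (m1 - m2) ** 2)


# Points lying on a line parallel to the 45-degree line, shifted up by 1:
y1 = [1, 2, 3]
y2 = [2, 3, 4]
print(mean_sq_perp_distance(y1, y2))  # 0.5
print(concordance_cc(y1, y2))         # 4/7, about 0.571
```

In this toy example Pearson's correlation would be exactly 1, while the concordance coefficient is pulled below 1 by the shift in means, which is precisely the deviation from the 45-degree line that it is meant to capture.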


From conditional probability to conditional distribution to conditional expectation, and back

I can’t count how many times I have looked up the formal (measure-theoretic) definitions of conditional probability distribution or conditional expectation (even though it’s not that hard :weary:). The most recent occasion was yesterday, and this time I took some notes.


Setting up an HTTPS static site using AWS S3 and CloudFront (and also Jekyll and s3_website)

For a while now I have wanted to migrate my websites away from GitHub Pages. While GitHub provides an excellent free service, its capabilities have some limitations, and the longer I wait, the harder (or more inconvenient) it becomes to migrate away from gh-pages. AWS S3 + CloudFront is a widely used alternative that has been around for a long time. Moreover, I was planning to get more familiar with AWS at all levels anyway, so it’s a great learning opportunity too.


Neural networks and deep learning - self-study and 2 presentations

Last month, after mentioning “deep learning” a few times to some professors, I suddenly found myself having to prepare three talks about “deep learning” within just one month… :sweat_smile: This is not a complaint. I genuinely enjoy studying the relevant theory, applying it to interesting datasets, and presenting what I have learned. Besides, teaching may be the best way to learn. Still, the situation is quite funny. :laughing: The deep learning hype is too real. :trollface:


Probabilistic interpretation of AUC

Unfortunately, this was not taught in any of my statistics or data analysis classes at university (wtf, it so needs to be :scream_cat:). So it took me some time to learn that the AUC (area under the ROC curve) has a nice probabilistic meaning.
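The standard probabilistic reading is that the AUC equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. A minimal pure-Python sketch that checks this numerically: `auc_trapezoid` integrates the empirical ROC curve with the trapezoidal rule, while `auc_pairwise` simply counts correctly ordered positive/negative pairs. Both function names and the toy scores are hypothetical, chosen only for illustration.

```python
def auc_pairwise(pos_scores, neg_scores):
    """P(score of a random positive > score of a random negative), ties counted as 1/2."""
    total = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                total += 1.0
            elif p == n:
                total += 0.5
    return total / (len(pos_scores) * len(neg_scores))


def auc_trapezoid(pos_scores, neg_scores):
    """Area under the empirical ROC curve, computed with the trapezoidal rule."""
    # Sweep the decision threshold over all distinct scores from high to low;
    # an example is predicted positive when its score is >= the threshold.
    thresholds = sorted(set(pos_scores) | set(neg_scores), reverse=True)
    tpr_prev, fpr_prev, area = 0.0, 0.0, 0.0  # the ROC curve starts at (0, 0)
    for t in thresholds:
        tpr = sum(s >= t for s in pos_scores) / len(pos_scores)
        fpr = sum(s >= t for s in neg_scores) / len(neg_scores)
        area += (fpr - fpr_prev) * (tpr + tpr_prev) / 2  # trapezoid between ROC points
        tpr_prev, fpr_prev = tpr, fpr
    return area


pos = [0.9, 0.8, 0.4]  # scores of positive examples
neg = [0.7, 0.4, 0.2]  # scores of negative examples
print(auc_trapezoid(pos, neg), auc_pairwise(pos, neg))
```

For these toy scores both functions give 5/6 (about 0.833): of the nine positive/negative pairs, 7 are correctly ordered and one (0.4 vs 0.4) is a tie. The pairwise version costs O(n_pos · n_neg) and is only meant to make the interpretation tangible, not to be efficient.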


Mining USPTO full text patent data - An exploratory analysis of machine learning and AI related patents granted in 2017 so far

The United States Patent and Trademark Office (USPTO) provides immense amounts of data (the data I used are in the form of XML files). After coming across these datasets, I thought it would be a good idea to explore where and how my areas of interest, namely machine learning (ML), data science, and artificial intelligence (AI), fit into the intellectual property space.


Freedman's paradox

Recently I came across the classic 1983 paper “A note on screening regression equations” by David Freedman. Freedman shows in an impressive way the dangers of data reuse in statistical analyses. The potentially dangerous scenarios include those where the results of one statistical procedure performed on the data are fed into another procedure performed on the same data. As a concrete example, Freedman considers the practice of first performing variable selection and then fitting another model, using only the selected variables, on the same data that was used to select them. Because the problem is unexpectedly severe, this phenomenon became known as “Freedman’s paradox”. Moreover, Freedman derives asymptotic estimates of the resulting errors in the paper.
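To see the effect, here is a small NumPy simulation sketch of the screening scenario described above: a response that is pure noise, independent of every candidate predictor; a first regression on all predictors; a screening step that keeps the predictors whose p-values fall below a loose threshold; and a refit on the survivors using the same data. The sizes (n = 100, p = 50) and thresholds (0.25 for screening, 0.05 afterwards) are my assumptions, chosen in the spirit of Freedman's setup. To avoid a SciPy dependency, the p-values use a normal approximation to the t distribution, which is only approximate at the modest residual degrees of freedom involved. All function names are hypothetical.

```python
import math

import numpy as np


def ols_fit(X, y):
    """OLS with an intercept; returns slopes, their t-statistics, and R^2."""
    n, k = X.shape
    Xd = np.column_stack([np.ones(n), X])          # prepend intercept column
    beta, *_ = np.linalg.lstsq(Xd, y, rcond=None)
    resid = y - Xd @ beta
    sigma2 = resid @ resid / (n - k - 1)           # unbiased residual variance
    cov = sigma2 * np.linalg.inv(Xd.T @ Xd)        # covariance of the estimates
    t = beta / np.sqrt(np.diag(cov))
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return beta[1:], t[1:], r2                     # drop the intercept


def approx_two_sided_p(t):
    # Assumption: normal approximation to the t distribution (no SciPy needed).
    return math.erfc(abs(t) / math.sqrt(2))


def freedman_simulation(n=100, p=50, screen_alpha=0.25, final_alpha=0.05, seed=0):
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, p))
    y = rng.standard_normal(n)                     # y is independent of every column of X
    # Pass 1: regress on all candidates, keep those passing a loose screen.
    _, t_all, _ = ols_fit(X, y)
    keep = [j for j in range(p) if approx_two_sided_p(t_all[j]) < screen_alpha]
    if not keep:
        return {"n_selected": 0, "n_significant": 0, "r2": 0.0}
    # Pass 2: refit on the survivors using the SAME data (the data reuse at issue).
    _, t_sel, r2 = ols_fit(X[:, keep], y)
    n_sig = sum(approx_two_sided_p(t) < final_alpha for t in t_sel)
    return {"n_selected": len(keep), "n_significant": int(n_sig), "r2": float(r2)}


print(freedman_simulation(seed=0))
```

With this setup one would expect roughly a quarter of the 50 pure-noise predictors to survive screening and, in the refit, several of them to look “significant” at the 5% level alongside a non-trivial R², even though y is unrelated to X by construction. Averaging over many seeds gives a more stable picture than any single run.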
